What is Regularization

Regularization is a technique that helps reduce overfitting, or variance, in a neural network by penalizing complexity. One common way to do this is to penalize relatively large weights in the model.

Learn more about overfitting and variance here: https://marko-kovacevic.com/blog/bias-and-variance-in-machine-learning/

L2 Regularization

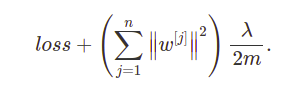

L2 regularization is the most common regularization technique. It is implemented by adding a term to the loss function that penalizes large weights.

With L2 regularization, the loss is the original loss plus a penalty on the weights. The formula uses the following variables:

| Variable | Definition |
|---|---|
| n | Number of layers |
| w[j] | Weight matrix for the j-th layer |
| m | Number of inputs |
| λ | Regularization parameter |
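Written out with the variables above, the regularized loss can be expressed as follows. This is a sketch that assumes the common convention of scaling the squared norm of each weight matrix by λ/(2m); the exact scaling factor is an assumption here.

```latex
\text{loss}_{L2} = \text{loss} + \frac{\lambda}{2m} \sum_{j=1}^{n} \left\lVert w^{[j]} \right\rVert^{2}
```

Here the squared norm of w[j] is the sum of the squared entries of the weight matrix of layer j, so every large weight increases the loss.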

The regularization parameter (λ) is another hyperparameter that needs tuning. If λ is large, training is incentivized to keep the weights small, and smaller weights make for a simpler model.
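To make the effect of the penalty concrete, the sketch below computes it in plain Python for two toy sets of weights. It assumes the penalty takes the form λ/(2m) times the sum of squared weights over all layers. The helper name `l2_penalty` and the toy weight values are illustrative and not part of any library.

```python
def l2_penalty(weight_matrices, lam, m):
    """Return lam / (2 * m) times the sum of squared weights over all layers.

    weight_matrices: list of 2-D nested lists, one per layer (toy format).
    lam: regularization parameter (lambda).
    m: number of inputs.
    """
    squared_sum = 0.0
    for matrix in weight_matrices:
        for row in matrix:
            for w in row:
                squared_sum += w * w
    return lam / (2 * m) * squared_sum


# Two toy networks with the same shape: one with small weights, one with large.
small_weights = [[[0.1, -0.2], [0.3, 0.0]], [[0.2], [-0.1]]]
large_weights = [[[1.0, -2.0], [3.0, 0.0]], [[2.0], [-1.0]]]

penalty_small = l2_penalty(small_weights, lam=0.5, m=10)
penalty_large = l2_penalty(large_weights, lam=0.5, m=10)
print(penalty_small, penalty_large)
```

The network with larger weights receives a much larger penalty, and raising `lam` scales both penalties up. Note that Keras's `regularizers.l2(l2)` adds `l2 * sum(w ** 2)` for the layer it is attached to, without the 1/(2m) factor, so its values are not directly comparable to λ in this form.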

Programming implementation

Keras:

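```python
import tensorflow as tf
from tensorflow.keras import layers, regularizers

# FEATURES (the number of input features) must be defined for your dataset.
l2_model = tf.keras.Sequential([
    layers.Dense(512, activation='elu', input_shape=(FEATURES,)),
    # L2 penalty on this layer's weights, with regularization factor 0.3
    layers.Dense(512, activation='elu',
                 kernel_regularizer=regularizers.l2(0.3)),
    layers.Dense(1)
])
```

Dropout Regularization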

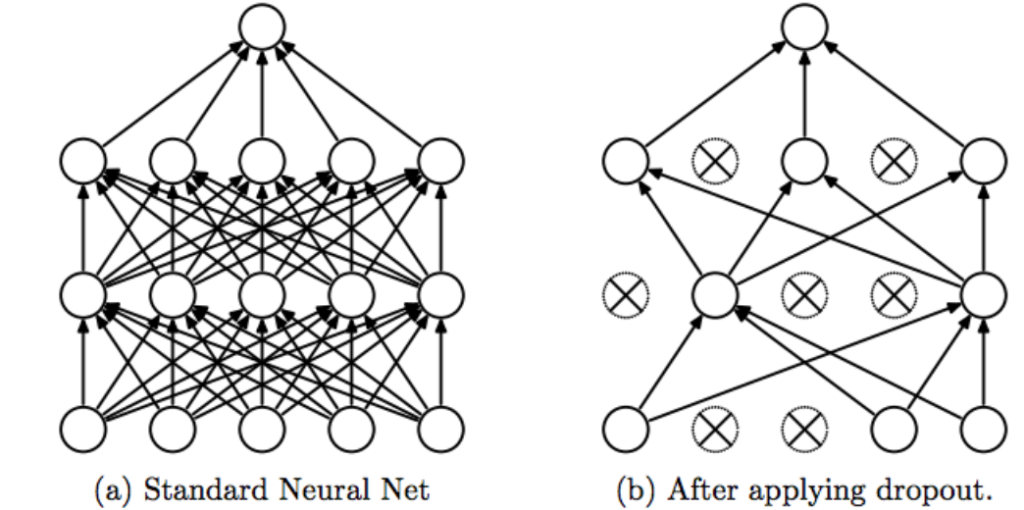

Dropout regularization randomly ignores a subset of nodes in a given layer during training. In other words, it drops those nodes from the layer.

Dropout, applied to a layer, consists of randomly “dropping out” (i.e. setting to zero) a number of output features of the layer during training. Say a given layer would normally have returned the vector [0.2, 0.5, 1.3, 0.8, 1.1] for a given input sample during training. After applying dropout, this vector will have a few zero entries distributed at random, e.g. [0, 0.5, 1.3, 0, 1.1].

The dropout rate is the parameter that controls how many nodes are dropped. It is a number between 0 and 1, and the higher the rate, the more nodes are dropped.

- 0.0 – No dropout regularization

- 1.0 – Drop out everything, the model learns nothing

- Values between 0.0 and 1.0 – More useful
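The mechanism can be sketched in a few lines of plain Python. This is an illustration, not the Keras implementation: the function name `dropout` is hypothetical, and the sketch assumes "inverted dropout", where surviving values are scaled up by 1/(1 - rate), which is also what the Keras `Dropout` layer documents. To avoid dividing by zero, the sketch rejects a rate of exactly 1.0.

```python
import random


def dropout(values, rate, rng):
    """Zero each value with probability `rate` and rescale the survivors.

    Rescaling by 1 / (1 - rate) keeps the expected sum of the layer's
    outputs unchanged during training.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0.0, 1.0)")
    keep_prob = 1.0 - rate
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so the run is repeatable
layer_output = [0.2, 0.5, 1.3, 0.8, 1.1]

dropped = dropout(layer_output, rate=0.4, rng=rng)    # some entries become 0.0
unchanged = dropout(layer_output, rate=0.0, rng=rng)  # no dropout at all
print(dropped)
print(unchanged)
```

Dropout is only applied during training. At inference time the layer passes its inputs through unchanged.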

Programming implementation

Keras:

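```python
import tensorflow as tf
from tensorflow.keras import layers

# FEATURES (the number of input features) must be defined for your dataset.
dropout_model = tf.keras.Sequential([
    layers.Dense(512, activation='elu', input_shape=(FEATURES,)),
    layers.Dropout(0.5),  # dropout rate 0.5 on the previous layer's outputs
    layers.Dense(512, activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(512, activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(512, activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(1)
])
```

Source: https://www.tensorflow.org/tutorials/keras/overfit_and_underfit#add_dropout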

L2 + Dropout regularization

L2 and dropout regularization can be combined, and the combination often gives very good results.

Programming implementation

Keras:

```python
import tensorflow as tf
from tensorflow.keras import layers, regularizers

# FEATURES (the number of input features) must be defined for your dataset.
combined_model = tf.keras.Sequential([
    layers.Dense(512, kernel_regularizer=regularizers.l2(0.0001),
                 activation='elu', input_shape=(FEATURES,)),
    layers.Dropout(0.5),
    layers.Dense(512, kernel_regularizer=regularizers.l2(0.0001),
                 activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(512, kernel_regularizer=regularizers.l2(0.0001),
                 activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(512, kernel_regularizer=regularizers.l2(0.0001),
                 activation='elu'),
    layers.Dropout(0.5),
    layers.Dense(1)
])
```

Source: https://www.tensorflow.org/tutorials/keras/overfit_and_underfit#combined_l2_dropout

Data augmentation

Getting more data can reduce overfitting. Data augmentation is a regularization method that reduces overfitting by augmenting the dataset. If the dataset is limited, you can get more data out of it by deriving new samples from the existing ones.

For images, much as a photographer produces variations of a single shot, you can derive a new image by mirroring the original, or by zooming and rotating it.

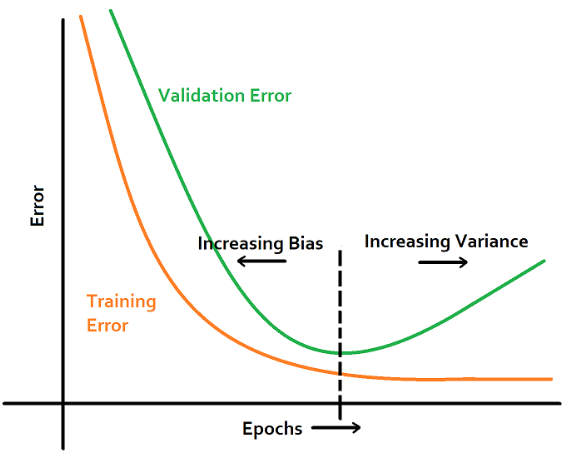
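As a minimal sketch of the idea, the plain-Python example below derives two extra samples from one tiny "image" stored as a nested list of pixel values. The function names `mirror`, `rotate_90`, and `augment` are hypothetical helpers written for this illustration.

```python
def mirror(image):
    """Flip the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus a mirrored and a rotated variant."""
    return [image, mirror(image), rotate_90(image)]


# A 2x3 grayscale "image" as a nested list of pixel values.
image = [[1, 2, 3],
         [4, 5, 6]]

variants = augment(image)
for variant in variants:
    print(variant)
```

In a real Keras pipeline you would more likely rely on built-in preprocessing layers; recent TensorFlow versions provide `layers.RandomFlip`, `layers.RandomRotation`, and `layers.RandomZoom` for this purpose.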

Early stopping

Early stopping is a regularization method that stops training at the point where the error on held-out data is at its lowest and still close to the training error, before the two start to diverge. The weights from that iteration are kept.
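One common way to put this into practice is to watch the validation loss and stop once it has not improved for a fixed number of epochs. The sketch below assumes that rule; the function name `early_stopping_epoch`, the `patience` value, and the loss values are all made up for illustration.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the weights from `best_epoch` would be kept.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    # The loss never stalled long enough, so training ran to the end.
    return best_epoch, len(val_losses) - 1


# Hypothetical validation losses: improving at first, then getting worse.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
best_epoch, stop_epoch = early_stopping_epoch(val_losses, patience=2)
print(best_epoch, stop_epoch)
```

In Keras, the `tf.keras.callbacks.EarlyStopping` callback provides this behavior through its `monitor`, `patience`, and `restore_best_weights` arguments.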

Thanks for reading this post.

References

- Coursera. 2020. Regularization – Practical Aspects Of Deep Learning | Coursera. [online] Available at: <https://www.coursera.org/learn/deep-neural-network/lecture/Srsrc/regularization> [Accessed 29 May 2020].

- Coursera. 2020. Dropout Regularization – Practical Aspects Of Deep Learning | Coursera. [online] Available at: <https://www.coursera.org/learn/deep-neural-network/lecture/eM33A/dropout-regularization> [Accessed 30 May 2020].

- Coursera. 2020. Other Regularization Methods – Practical Aspects Of Deep Learning | Coursera. [online] Available at: <https://www.coursera.org/learn/deep-neural-network/lecture/Pa53F/other-regularization-methods> [Accessed 30 May 2020].

- Deeplizard.com. 2020. Regularization In A Neural Network Explained. [online] Available at: <https://deeplizard.com/learn/video/iuJgyiS7BKM> [Accessed 29 May 2020].

- TensorFlow. 2020. Overfit And Underfit | Tensorflow Core. [online] Available at: <https://www.tensorflow.org/tutorials/keras/overfit_and_underfit#combined_l2_dropout> [Accessed 30 May 2020].

- mc.ai. 2020. Why “Early-Stopping” Works As Regularization?. [online] Available at: <https://mc.ai/why-early-stopping-works-as-regularization/> [Accessed 30 May 2020].