Learning rate schedules are techniques that adjust the learning rate during training according to a predefined schedule.

For an introduction to the learning rate, see https://marko-kovacevic.com/blog/learning-rate-in-deep-learning/

Popular learning rate schedule techniques are:

- Step decay

- Exponential decay

- Time decay

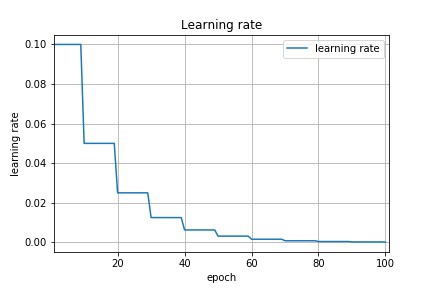

1. Step Decay

Step decay reduces the learning rate by a predefined factor after a predefined number of epochs.

Usually the learning rate is halved every 5 epochs or multiplied by 0.1 every 20 epochs. These numbers depend on the type of problem.

1.1. Mathematical Implementation

$$lr = lr_0 \cdot d^{\lfloor (1 + t) / r \rfloor}$$

- lr: learning rate

- lr0: initial learning rate

- d: decay parameter (how much the learning rate should change at each drop)

- t: iteration number

- r: how often the rate should be dropped (10 corresponds to a drop every 10 iterations)
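To make the formula concrete, here is a minimal sketch in plain Python. The function name `step_decay_lr` and the example values (lr0 = 0.1, d = 0.5, r = 10) are assumptions chosen for illustration, not part of any library.

```python
import math

def step_decay_lr(lr0, d, r, t):
    """Step decay: lr0 * d ** floor((1 + t) / r)."""
    return lr0 * d ** math.floor((1 + t) / r)

# Assumed example values: start at 0.1 and halve every 10 iterations.
for t in (0, 8, 9, 19, 29):
    print(t, step_decay_lr(0.1, 0.5, 10, t))
```

Because of the `1 + t` term, the first drop happens at t = 9 when t is counted from zero, that is, on the tenth iteration.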

1.2. Programming Implementation

Keras:

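    import math
    from tensorflow.keras.callbacks import LearningRateScheduler

    def step_decay(epoch):
        # Start at 0.1 and halve the learning rate every 10 epochs.
        initial_lrate = 0.1
        drop = 0.5
        epochs_drop = 10.0
        lrate = initial_lrate * math.pow(drop, math.floor((1 + epoch) / epochs_drop))
        return lrate

    # Pass the callback to training, e.g. model.fit(..., callbacks=[lrate]).
    lrate = LearningRateScheduler(step_decay)

https://keras.io/callbacks/#learningratescheduler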

LearningRateScheduler is a callback that lets you define your own custom learning rate schedule.

With callbacks you can customize the behavior of a Keras model during training.

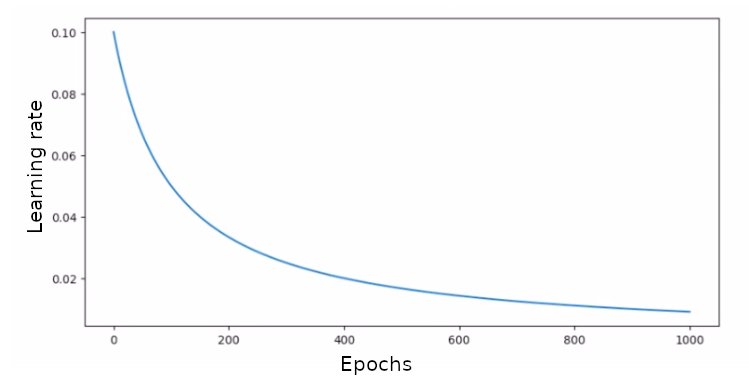

2. Exponential Decay

Exponential decay reduces the learning rate by following an exponential curve.

2.1. Mathematical Implementation

$$lr = lr_0 \cdot e^{-d \cdot t}$$

- lr: learning rate

- lr0: initial learning rate

- e: Euler's number (approximately 2.71828)

- d: decay parameter (how quickly the learning rate decreases)

- t: iteration number
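As a worked example of this formula, the following plain-Python sketch evaluates the schedule at a few iterations. The function name `exponential_decay_lr` and the values lr0 = 0.1 and d = 0.05 are illustrative assumptions.

```python
import math

def exponential_decay_lr(lr0, d, t):
    """Exponential decay: lr0 * e ** (-d * t)."""
    return lr0 * math.exp(-d * t)

# Assumed example values.
lr0, d = 0.1, 0.05
for t in (0, 10, 50):
    print(t, exponential_decay_lr(lr0, d, t))

# The rate halves every ln(2) / d iterations, whatever lr0 is.
half_life = math.log(2) / d
print("half-life in iterations:", half_life)
```

Thinking of d in terms of this half-life can make it easier to choose: a larger d means the learning rate halves after fewer iterations.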

2.2. Programming Implementation

Tensorflow:

    tf.compat.v1.train.exponential_decay(
        learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None
    )

https://www.tensorflow.org/api_docs/python/tf/compat/v1/train/exponential_decay

Keras:

tf.keras.optimizers.schedules.ExponentialDecay( initial_learning_rate, decay_steps, decay_rate, staircase=False, name=None )

https://www.tensorflow.org/api_docs/python/tf/keras/optimizers/schedules/ExponentialDecay
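As far as the linked documentation describes it, both functions compute `initial_learning_rate * decay_rate ^ (step / decay_steps)`. This is the formula from section 2.1 in a different parametrization, with d = -ln(decay_rate) / decay_steps. The sketch below re-implements that documented formula in plain Python to show the correspondence. It does not call TensorFlow, and the function name `tf_style_exponential_decay` and the example values are assumptions.

```python
import math

def tf_style_exponential_decay(initial_learning_rate, step, decay_steps,
                               decay_rate, staircase=False):
    """Plain-Python stand-in for the documented formula, not TensorFlow code."""
    exponent = step / decay_steps
    if staircase:
        # Staircase mode decays in discrete jumps every decay_steps steps.
        exponent = math.floor(exponent)
    return initial_learning_rate * decay_rate ** exponent

# Assumed example values.
lr0, decay_steps, decay_rate = 0.1, 1000, 0.96
d = -math.log(decay_rate) / decay_steps  # equivalent d in lr0 * e^(-d * t)

step = 2500
smooth = tf_style_exponential_decay(lr0, step, decay_steps, decay_rate)
stepped = tf_style_exponential_decay(lr0, step, decay_steps, decay_rate,
                                     staircase=True)
print(smooth, lr0 * math.exp(-d * step))  # equal up to rounding
print(stepped)
```

With `staircase=True` the exponent is rounded down, so the schedule behaves like a step decay that multiplies the rate by `decay_rate` every `decay_steps` steps.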

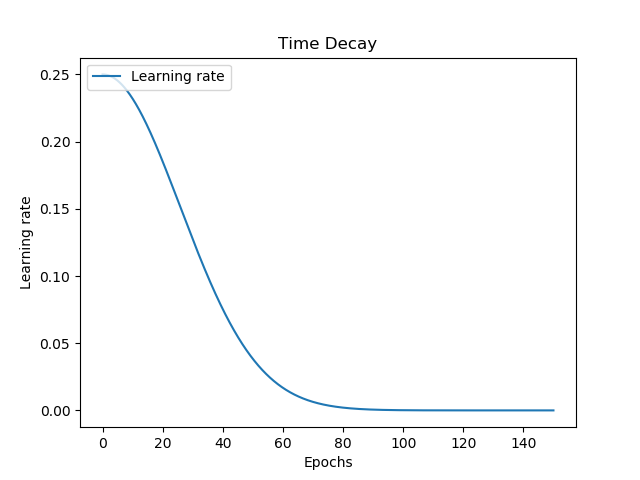

3. Time Decay

Time decay reduces the learning rate according to the time that has elapsed, where time is the number of elapsed iterations.

Time decay is also called 1/t decay (Inverse time decay).

3.1. Mathematical Implementation

$$lr = \frac{lr_0}{1 + d \cdot t}$$

- lr: learning rate

- lr0: initial learning rate

- d: decay parameter (how quickly the learning rate decreases)

- t: iteration number
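A minimal plain-Python sketch of this formula follows. The function name `time_decay_lr` and the values lr0 = 0.1 and d = 0.01 are assumptions for illustration.

```python
def time_decay_lr(lr0, d, t):
    """Time (1/t) decay: lr0 / (1 + d * t)."""
    return lr0 / (1 + d * t)

# Assumed example values.
lr0, d = 0.1, 0.01
for t in (0, 100, 900):
    print(t, time_decay_lr(lr0, d, t))
```

The rate is halved at t = 1/d (here t = 100), but reaching a tenth of the initial rate takes until t = 9/d, so the decay slows down as training goes on.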

3.2. Programming Implementation

Tensorflow:

tf.compat.v1.train.inverse_time_decay( learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None )

https://www.tensorflow.org/api_docs/python/tf/compat/v1/train/inverse_time_decay

Keras:

tf.keras.optimizers.schedules.InverseTimeDecay( initial_learning_rate, decay_steps, decay_rate, staircase=False, name=None )

https://www.tensorflow.org/api_docs/python/tf/keras/optimizers/schedules/InverseTimeDecay
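As far as the linked documentation describes it, these functions compute `initial_learning_rate / (1 + decay_rate * step / decay_steps)`, so the d from section 3.1 corresponds to decay_rate / decay_steps. The plain-Python sketch below mirrors that documented formula, including the staircase variant. It does not call TensorFlow, and the function name `tf_style_inverse_time_decay` and the example values are assumptions.

```python
import math

def tf_style_inverse_time_decay(initial_learning_rate, step, decay_steps,
                                decay_rate, staircase=False):
    """Plain-Python stand-in for the documented formula, not TensorFlow code."""
    ratio = step / decay_steps
    if staircase:
        # Staircase mode only updates the rate every decay_steps steps.
        ratio = math.floor(ratio)
    return initial_learning_rate / (1 + decay_rate * ratio)

# Assumed example values.
lr0, decay_steps, decay_rate = 0.1, 1000, 0.5
d = decay_rate / decay_steps  # equivalent d in lr0 / (1 + d * t)

for step in (0, 1500, 2000):
    smooth = tf_style_inverse_time_decay(lr0, step, decay_steps, decay_rate)
    stepped = tf_style_inverse_time_decay(lr0, step, decay_steps, decay_rate,
                                          staircase=True)
    print(step, smooth, lr0 / (1 + d * step), stepped)
```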

Conclusion

Learning rate schedules are good techniques for updating the learning rate during training. They are usually a better choice than a constant learning rate (one that is never updated during training) because training tends to reach the minimum loss faster and is less likely to overshoot it.
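The overshooting argument can be illustrated on a toy problem. The sketch below runs plain gradient descent on f(x) = x^2, once with a deliberately too-large constant learning rate and once with a time decay schedule. The function name `gradient_descent` and all values are assumptions chosen for illustration, and a one-dimensional quadratic says little about how real networks behave.

```python
def gradient_descent(lr_at, steps=20, x=1.0):
    """Minimize f(x) = x**2 (gradient 2 * x), using lr_at(t) at step t."""
    for t in range(steps):
        x -= lr_at(t) * 2 * x
    return x

# Constant learning rate of 1.0 versus time decay with lr0 = 1.0, d = 0.3.
constant = gradient_descent(lambda t: 1.0)
decayed = gradient_descent(lambda t: 1.0 / (1 + 0.3 * t))

print("constant lr:", constant)
print("time decay: ", decayed)
```

With the constant rate, every update jumps from one side of the minimum to the other and x never gets closer to 0. With the decaying rate the steps shrink, so x approaches the minimum.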

Of these learning rate schedule techniques, step decay is often the preferred one because its parameters are easy to understand.

In addition to learning rate schedules, there are adaptive learning rate techniques, which often work better. Learn about them here: https://marko-kovacevic.com/blog/adaptive-learning-rate-in-deep-learning/

Thanks for reading this post.
