1. What is a GPU

A GPU (Graphics Processing Unit) is a chip designed to handle graphics in computing environments. It is built to perform tasks that involve many simultaneous computations.

2. Difference between GPU and CPU

A CPU (Central Processing Unit) typically has 8, 16, 32, or 64 cores, while a GPU can have thousands of cores. Each GPU core runs at a lower clock speed than a CPU core, but because so many of them work at the same time, a GPU can complete highly parallel computations much faster than a CPU.
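As a rough illustration of why many slow cores can beat a few fast ones, the sketch below multiplies core count by clock speed. The core counts and clock speeds are hypothetical round numbers, not measurements of any real chip, and the model assumes the workload splits perfectly across all cores, which real workloads rarely do.

```python
def idealized_ops_per_second(cores, clock_ghz):
    """Upper bound on throughput if every core did one operation per clock cycle."""
    return cores * clock_ghz * 1e9


# Hypothetical figures chosen only for illustration.
cpu_throughput = idealized_ops_per_second(cores=64, clock_ghz=3.0)
gpu_throughput = idealized_ops_per_second(cores=4000, clock_ghz=1.5)

ratio = gpu_throughput / cpu_throughput
print(f"Idealized GPU/CPU throughput ratio: {ratio:.1f}x")
```

The ratio only holds for work that can be divided into thousands of independent pieces. For a task that must run step by step, the faster CPU core would still win.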

3. How GPUs can be used in deep learning

Artificial neural networks (ANNs) are built from large numbers of identical artificial neurons, which makes them highly parallel. An ANN can have from 1 million to well over a billion parameters, all of which must be adjusted during training. ANNs also need a large amount of training data to reach high accuracy: hundreds of thousands to millions of input samples have to be run through both a forward and a backward pass, which adds up to a huge amount of computation.

Using a GPU for ANN training can speed it up considerably, because the large number of GPU cores perform these calculations in parallel.

Training is fastest when a multi-GPU cluster is used. A multi-GPU cluster is a technique in which several GPUs are combined in one computer and compute tasks in parallel.
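To get a feel for how quickly parameter counts grow, here is a minimal sketch that counts the weights and biases of a fully connected network. The layer sizes are hypothetical and chosen only for illustration. Other layer types (convolutional, recurrent, and so on) are counted differently.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each layer with n_in inputs and n_out outputs has n_in * n_out weights
    plus n_out biases, which is (n_in + 1) * n_out parameters.
    """
    return sum(
        (n_in + 1) * n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical network: 784 inputs, two hidden layers of 512 units, 10 outputs.
layer_sizes = [784, 512, 512, 10]
total = dense_parameter_count(layer_sizes)
print(f"Trainable parameters: {total:,}")
```

Every one of these parameters is updated on each training step, so even this small example involves hundreds of thousands of multiply-add operations per sample.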

4. How much faster is training with a GPU

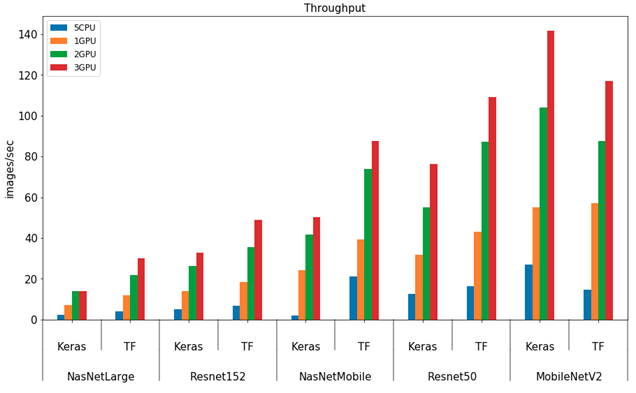

Microsoft published research comparing 5 CPUs with 3 GPUs and found that the 3 GPUs were faster than the 5 CPU clusters by 804% ( https://azure.microsoft.com/en-us/blog/gpus-vs-cpus-for-deployment-of-deep-learning-models/ ).

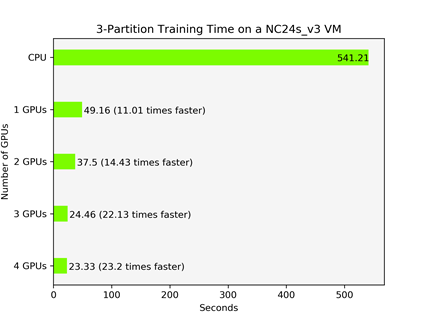

In another study, Microsoft found that 4 GPUs can be 23.2 times faster than a CPU with a gradient boosted decision tree ( https://azure.microsoft.com/en-us/blog/azure-machine-learning-service-now-supports-nvidia-s-rapids/ ).

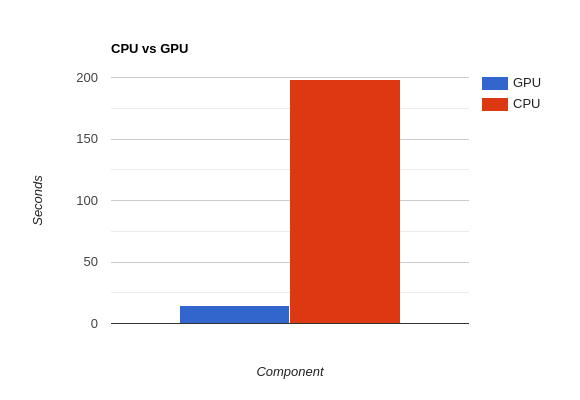

I also ran my own deep learning experiment with a GPU: training without a GPU took 198.2 seconds, and training with a GPU took 14.4 seconds, which is 13.76x faster. The experiment was a text classification task run in the Google Colaboratory environment (Nvidia K80s, T4s, P4s and P100s).
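The speedup figure follows directly from the two timings. A small sketch of the arithmetic, where the helper name `speedup` is just an illustrative choice:

```python
def speedup(baseline_seconds, accelerated_seconds):
    """How many times faster the accelerated run is than the baseline."""
    return baseline_seconds / accelerated_seconds


cpu_seconds = 198.2  # training time without a GPU, from the experiment above
gpu_seconds = 14.4   # training time with a GPU, from the experiment above

factor = speedup(cpu_seconds, gpu_seconds)
time_saved = 1 - gpu_seconds / cpu_seconds

print(f"Speedup: {factor:.2f}x")
print(f"Training time saved: {time_saved:.1%}")
```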

5. Programming Implementation

5.1. Single GPU

TensorFlow will transparently run on a single GPU with no code changes required.

You can print tf.config.experimental.list_physical_devices('GPU') to confirm that TensorFlow can see the GPU.
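The check can be wrapped in a small helper, sketched below. It uses `tf.config.list_physical_devices`, the non-experimental name for the same call in recent TensorFlow 2.x releases. The helper name `visible_gpus` and the choice to treat a missing TensorFlow install as "no GPU" are assumptions made for this example.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see (empty list if none)."""
    try:
        import tensorflow as tf
    except ImportError:
        # Assumption for this sketch: a missing TensorFlow install counts as "no GPU".
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = visible_gpus()
print(f"GPUs visible to TensorFlow: {len(gpus)}")
for name in gpus:
    print(" ", name)
```

An empty list means TensorFlow will fall back to the CPU, which is worth checking before starting a long training run.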

5.2. Multiple GPU

The best practice for using multiple GPUs is to use tf.distribute.Strategy. Here is a simple example:

    import tensorflow as tf

    # Create one replica of the model per visible GPU.
    strategy = tf.distribute.MirroredStrategy()

    # Build and compile the model inside the strategy scope
    # so that its variables are mirrored across the GPUs.
    with strategy.scope():
        inputs = tf.keras.layers.Input(shape=(1,))
        predictions = tf.keras.layers.Dense(1)(inputs)
        model = tf.keras.models.Model(inputs=inputs, outputs=predictions)
        model.compile(loss='mse',
                      optimizer=tf.keras.optimizers.SGD(learning_rate=0.2))

This code runs a copy of your model on each GPU and splits the input data between them, which is known as "data parallelism".

https://www.tensorflow.org/guide/gpu#using_multiple_gpus
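To show the idea behind data parallelism without needing any GPUs, here is a plain-Python sketch. It is not how TensorFlow implements it internally. It only demonstrates that, for equally sized shards, averaging the gradients computed on each shard gives the same result as computing the gradient on the whole batch. The one-parameter model `y = w * x`, the data, and the helper names are all made up for this example.

```python
def mse_gradient(w, xs, ys):
    """Gradient of the mean squared error with respect to w for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def split_evenly(items, parts):
    """Split a list into `parts` equally sized shards (length must divide evenly)."""
    size = len(items) // parts
    return [items[i * size:(i + 1) * size] for i in range(parts)]


# Toy batch and starting weight, chosen only for illustration.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5
num_replicas = 2  # stands in for the number of GPUs

# Each "replica" computes a gradient on its own shard of the batch.
shard_gradients = [
    mse_gradient(w, shard_x, shard_y)
    for shard_x, shard_y in zip(split_evenly(xs, num_replicas),
                                split_evenly(ys, num_replicas))
]

# Combining the per-replica gradients recovers the full-batch gradient.
averaged_gradient = sum(shard_gradients) / num_replicas
full_batch_gradient = mse_gradient(w, xs, ys)

print("Per-replica gradients:", shard_gradients)
print("Averaged gradient:    ", averaged_gradient)
print("Full-batch gradient:  ", full_batch_gradient)
```

Because the combined gradient matches the full-batch one, every replica can apply the same update and the model copies stay in sync, while each device only processes a fraction of the data.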

6. Conclusion

GPUs can speed up deep learning training considerably through parallel computation. When training takes less time, more training data can be added to make predictions more accurate.

It can be better and cheaper to pay for a Xeon CPU together with a good GPU such as the NVIDIA V100 than to pay for a CPU alone, because with only a CPU you pay for 30 units of time, while with a CPU and GPU you pay for only 1 unit of time.
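Whether the GPU machine really is cheaper depends on its hourly price. The sketch below works through the arithmetic using the 30x figure from the text. Both hourly prices are hypothetical placeholders, not real cloud prices, so substitute your provider's numbers.

```python
def total_cost(price_per_hour, hours):
    """Total bill for renting a machine for the given number of hours."""
    return price_per_hour * hours


speedup = 30.0               # CPU-only time divided by CPU+GPU time, from the text
cpu_price_per_hour = 1.0     # hypothetical price of a CPU-only machine
gpu_price_per_hour = 5.0     # hypothetical price of a CPU+GPU machine

cpu_hours = 30.0
gpu_hours = cpu_hours / speedup

cpu_cost = total_cost(cpu_price_per_hour, cpu_hours)
gpu_cost = total_cost(gpu_price_per_hour, gpu_hours)

# The GPU machine stays cheaper as long as its hourly price is below this value.
break_even_price = cpu_price_per_hour * speedup

print(f"CPU only:  {cpu_cost:.2f}")
print(f"CPU + GPU: {gpu_cost:.2f}")
print(f"Break-even GPU price per hour: {break_even_price:.2f}")
```

With these placeholder prices, the GPU machine costs five times more per hour but still produces a much smaller bill, because it is rented for a thirtieth of the time.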

Thanks for reading this post.

7. References

- NVIDIA Developer. 2020. Artificial Neural Network. [online] Available at: <https://developer.nvidia.com/discover/artificial-neural-network> [Accessed 1 May 2020].

- Azure.microsoft.com. 2020. Azure Machine Learning Service Now Supports NVIDIA's RAPIDS. [online] Available at: <https://azure.microsoft.com/en-us/blog/azure-machine-learning-service-now-supports-nvidia-s-rapids/> [Accessed 1 May 2020].

- MissingLink.ai. 2020. The Complete Guide To Deep Learning With GPUs – MissingLink.ai. [online] Available at: <https://missinglink.ai/guides/computer-vision/complete-guide-deep-learning-gpus/> [Accessed 1 May 2020].

- Azure.microsoft.com. 2020. GPUs Vs CPUs For Deployment Of Deep Learning Models. [online] Available at: <https://azure.microsoft.com/en-us/blog/gpus-vs-cpus-for-deployment-of-deep-learning-models/> [Accessed 1 May 2020].

- TensorFlow. 2020. Use A GPU | TensorFlow Core. [online] Available at: <https://www.tensorflow.org/guide/gpu> [Accessed 2 May 2020].